What is the use case for AI-generated video in L&D?

I'm genuinely asking...

As I’ve written previously, ‘talking head’ videos are the default format for a lot of digital-learning content on the internet.

When I tell people I ‘design online courses’ for a living (an incomplete but user-friendly description of my job), they usually stare at me blankly, then change the topic.

On the rare occasion someone asks a follow-up question, it will often be along the lines of: ‘Do you create the videos yourself?’

Out in the real world, beyond the walls of L&D, online courses don’t involve flip cards, multiple-choice questions, branching scenarios, or assessments. They’re a playlist of videos, delivered by a charismatic instructor, accompanied by downloadable PDFs.

One of the reasons these videos are ubiquitous on consumer-learning platforms is that they replicate the experience of formal education. If you associate learning with attending traditional ‘stand and deliver’ lectures in school or university, then you’ll naturally expect a similar experience from digital learning.

Another reason for the popularity of video is that video is… popular! People like it. It requires minimal effort, and it saves learners from reading all that boring text — yuck!

Of course, the problem with replicating the traditional lecture digitally is that the traditional lecture isn’t all that effective at helping people learn. (See the ‘Deep dive’ below for more on this.)

What ‘talking head’ videos can do effectively, in my opinion — and what we tend to use them for on the Mind Tools Custom team — is build an emotional connection with the viewer.

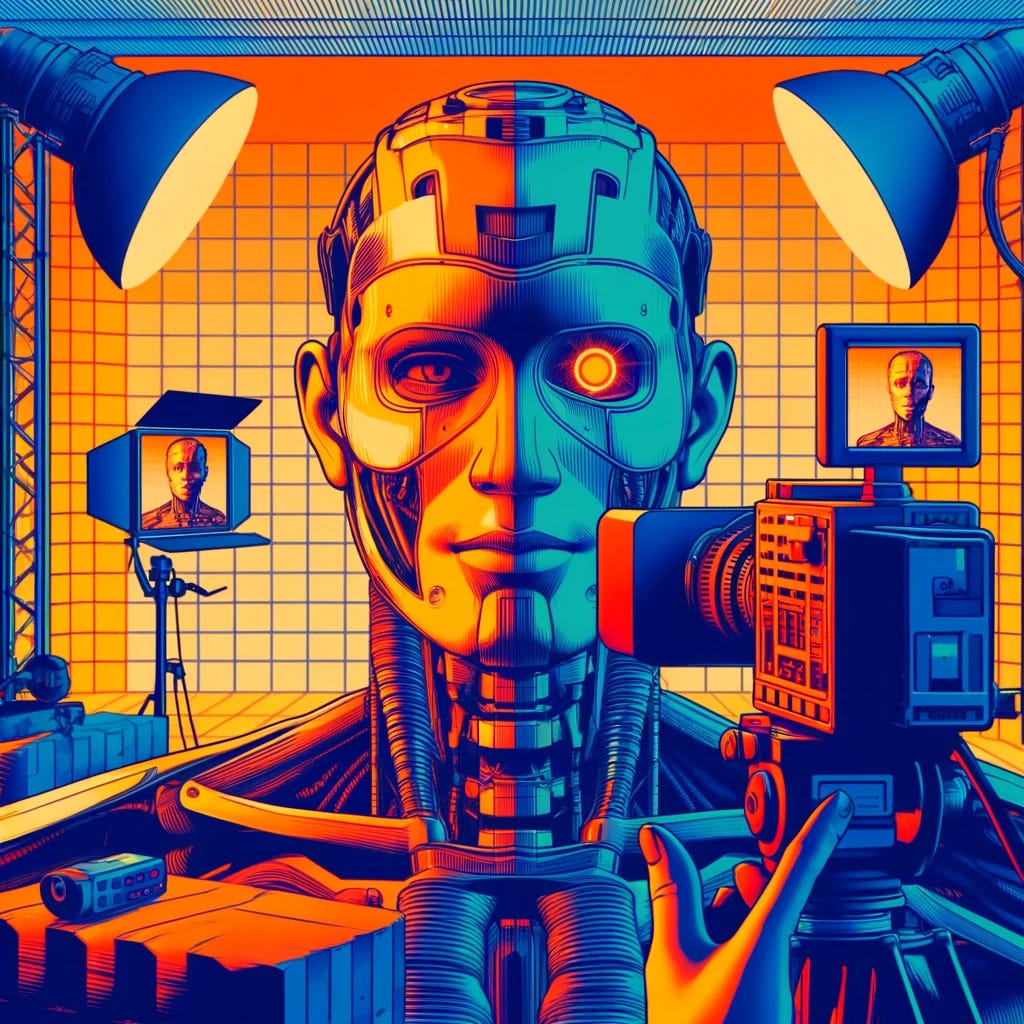

This is what makes the rise of AI-generated video in L&D slightly puzzling to me. By which I mean, I genuinely can’t see when I would ever choose to use it as a learning designer.

For those unfamiliar, the concept is simple: write a script, upload it to the platform of your choice, select a humanoid avatar, export your video. No need for expensive camera equipment, lighting, microphones, or actors.

In my opinion, there are a few major problems with the current state of the art:

🤪 AI-generated videos are… weird

While the ability to generate synthetic videos with a few keystrokes is a marvel of modern technology, the output is slightly unsettling. It’s all just a little ‘uncanny valley’ for my taste.

The technology is at a strange crossroads, where AI avatars look like real people but don’t yet act like them. Their faces are stiff, their eyes are cold, and their voices are usually out of sync with their lips.

The effect of this is that, whenever I watch an AI-generated video, I usually find myself focusing on the general weirdness of the experience, rather than the content of the message.

These issues will undoubtedly be resolved as the technology improves, but we’re not yet at a point where AI video is indistinguishable from real video. And if we were, we’d just have a different set of problems to contend with.

🤖 AI-generated videos are inherently inhuman

As I mentioned above, our team typically uses ‘talking head’ videos to engage learners’ emotions.

For one client project, we created videos to support an onboarding program, featuring colleagues talking about their experience at the company. We even included a blooper reel to convey the organization’s personality and culture.

The value of these videos came from the fact that they were based around real stories, told by real people, with real emotion. The content of the videos was important, but arguably less important than the effect of seeing and hearing from recognizable colleagues in the organization.

Unless we start to view AI avatars as colleagues, synthetic video will never be able to replicate this effect.

🧐 AI-generated videos lack credibility

I started this article by obliquely referencing video-based learning platforms like MasterClass and BBC Maestro. What these platforms offer is not just great content; it’s great content delivered by an expert.

Want to learn photography? Here’s Annie Leibovitz. Want to learn music production? Mark Ronson will teach you.

Putting aside the question of whether experts make good teachers, there’s no denying that Annie Leibovitz and Mark Ronson know what they’re talking about.

The same cannot be said for AI avatars.

And if you know your video tutorial is being delivered by an AI, how can you be sure what it’s telling you isn’t just a hallucination?

💩 AI-generated videos send the wrong signal to learners

One of the key selling points of AI-video tools is that they allow L&D teams to save time and money. And I can see why that might be compelling. Producing high-quality videos can be resource-intensive.

But what signal does this send to learners?

Let’s imagine we’ve created a custom AI avatar, trained on video clips of our client’s CEO. The CEO is extremely busy, but we’d love to include a video of them in our onboarding program. So, what do we do?

We can either:

1. invest time and money to film the real CEO; or

2. generate a video of the avatar CEO using AI.

In my view, Option 1 signals to the learner that the organization’s leadership is genuinely invested in its employees. Option 2 suggests that the CEO has more important things to worry about.

If Option 1 really is off the table, I’d honestly sooner go with secret Option 3 — forget about the video — than Option 2.

I’m willing to accept that I might be completely wrong about AI-generated videos, and that there really are legitimate use cases out there. But as things stand, I can’t think of any.

Are you using AI-generated video in your programs? If so, I’d love to hear about your experience. Get in touch by emailing custom@mindtools.com or replying to this newsletter from your inbox.

🎧 On the podcast

Whether you’re delivering workshops, speaking at conferences, presenting to senior leaders, or even hosting L&D’s favorite podcast, public speaking is a critical skill for learning professionals. So how do you develop this skill, and how do you manage your nerves when speaking publicly?

In last week’s episode of The Mind Tools L&D Podcast, Lara and I were joined by Samantha Tulloch, public speaker and business-transformation consultant. We discussed the nuances of public speaking in an L&D context, and techniques that can help you prepare and deliver effectively.


You can subscribe to the podcast on iTunes, Spotify or the podcast page of our website. Want to share your thoughts? Get in touch via @RossDickieMT, @RossGarnerMT or #MindToolsPodcast.

📖 Deep dive

Earlier in this week’s Dispatch, I referenced the drawbacks of the traditional ‘stand and deliver’ lecture, which a lot of video-based learning content seeks to emulate.

In a 2014 meta-analysis of 225 studies into undergraduate STEM teaching methods, researchers from the University of Washington found that students in classes with traditional methods were 1.5 times more likely to fail than students in classes that used ‘active learning’ methods. On average, active methods also resulted in a 6% improvement in examination scores.

The authors write:

‘Although traditional lecturing has dominated undergraduate instruction for most of a millennium and continues to have strong advocates, current evidence suggests that a constructivist “ask, don’t tell” approach may lead to strong increases in student performance.’

While we should be cautious when applying these findings to the design of workplace-learning materials, we should also think twice before rushing to replicate traditional methods of instruction through new technologies.

Freeman, S. et al. (2014). ‘Active Learning Increases Student Performance in Science, Engineering, and Mathematics.’ PNAS, 111, 8410-8415.

👹 Missing links

🦾 How Should I Be Using AI Right Now?

I recently cancelled my ChatGPT Plus subscription, but this podcast has made me reconsider that decision. Hosted by my crush, Ezra Klein, and featuring my go-to source for all things AI, Ethan Mollick, the episode offers practical guidance on how to get the most out of LLMs, and what we can (and cannot) reasonably expect the current models to help us achieve in and out of work.

🍦 The soft life: why millennials are quitting the rat race

Rose Gardner (not to be confused with Ross Garner) had all the trappings of a successful life: a degree from a top university, a good job, and a flat she owned in London. In spite of this, she felt trapped — forced to stay in a job she hated to pay her mortgage. So she packed it in, sold her flat, and chose the ‘soft life’, eschewing all the things she was supposed to want in favour of a simpler lifestyle. According to this Guardian article, she is one of a growing number of millennials who are opting out of the rat race.

🐈 Library Fees? No Problem. Just Show Us Your Cat Photos.

A library in Massachusetts has offered to fur-give borrowers who lost or damaged books and then stayed away to avoid paying a fine. In exchange for a photograph, drawing, or magazine clipping of a cat, these miscreants can have their debts forgiven and their library cards reactivated.

👋 And finally…

While we’re on the topic of cats, regular Dispatch readers will be painfully aware that my wife and I recently adopted two kittens — one of whom bears a striking resemblance to OwlKitty. They make me feel like Kenough.

👍 Thanks!

Thanks for reading The L&D Dispatch from Mind Tools! If you’d like to speak to us, work with us, or make a suggestion, you can email custom@mindtools.com.

Or just hit reply to this email!
